“Alexa play music.” “Sorry, I don’t know the answer to your question.”

“Alexa play music.” “Sorry, I don’t know the answer to your question.”

“Alexa play music.” “Sorry, I don’t know the answer to your question.”

This—or some variation of it—is what happens to my mom whenever she interacts with her Amazon Echo.

And it’s not just Alexa. Every voice-enabled device has trouble understanding accents, whether you’re using Siri, Google Home, Microsoft Cortana, or others. As more devices become voice-enabled, the companies developing them are failing to use diverse enough data to train these systems to be inclusive for all users.

There are about 56 million Latinos living in the U.S., making us the largest minority population in the country. And according to a PwC survey, Latinos as a group are earlier tech adopters than any other. Yet existing devices don’t fully support Spanish or Spanish accents.

If companies care about Latinos, who are predicted to have $1.7 trillion in buying power by 2020, according to The Selig Center for Economic Growth, they should be doing a lot more to ensure their products are fully usable for them. More and more Latinos are also graduating from computer science programs. More than 8 percent of Latinos graduated from Computer Science colleges, according to American Community Survey data.

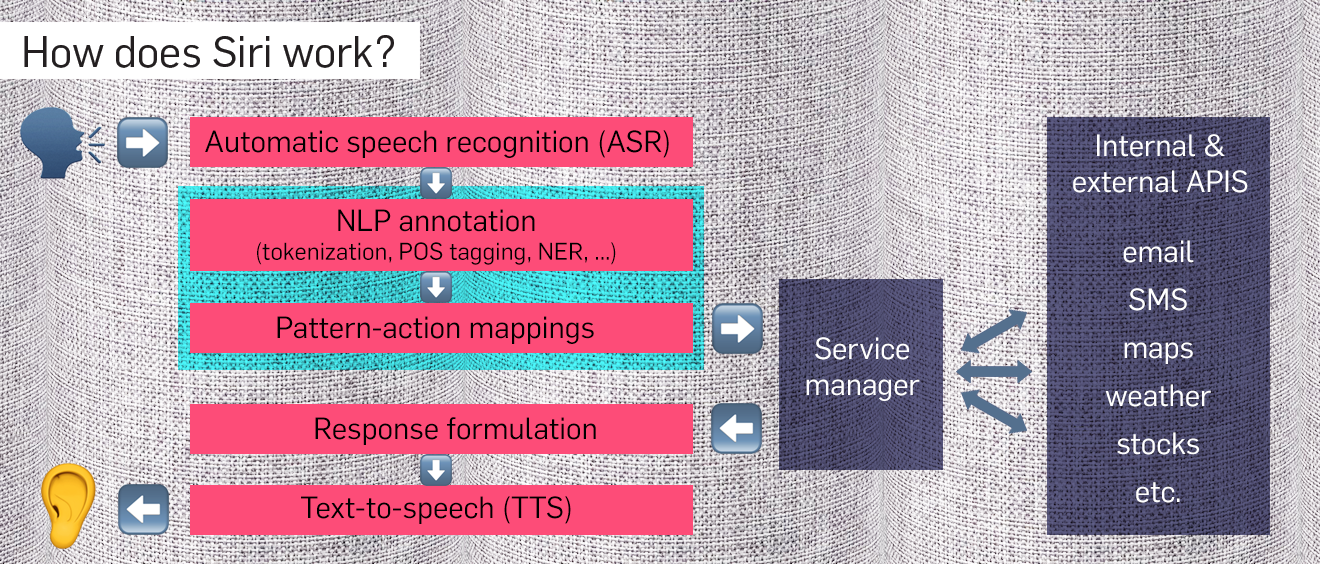

The technology for voice recognition, or ASR (Automatic Speech Recognition), takes an acoustic signal as an audio input, tries to determine which words were actually spoken, and then outputs that list of words as text. Natural Language Processing (NLP) then takes this text and extracts the meaning behind it, which can then be mapped to a phrase or command (for example: “Play Beatles radio”). Most NLP platforms (Watson, API.ai, Wit.ai, etc.) do support multiple languages, so once the words are “extracted,” it should be simple enough to understand their meaning.
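To make the second half of that pipeline concrete, here is a minimal sketch of mapping already-transcribed text to a command. It is not how Watson, API.ai, or Wit.ai work internally; those platforms use trained models. The `INTENT_PATTERNS` table and the `parse_intent` function are hypothetical names invented for this illustration.

```python
import re
from typing import Optional

# Hypothetical intent table for illustration only. Real NLP platforms
# use trained language models rather than hand-written patterns.
INTENT_PATTERNS = {
    "play_music": re.compile(r"^play\s+(?P<query>.+)$"),
    "stop": re.compile(r"^(stop|pause)$"),
}


def parse_intent(transcript: str) -> Optional[dict]:
    """Map the text produced by the ASR step to an intent and its slots."""
    # Normalize: lowercase and drop punctuation.
    text = re.sub(r"[^\w\s]", "", transcript.lower()).strip()
    for intent, pattern in INTENT_PATTERNS.items():
        match = pattern.match(text)
        if match:
            return {"intent": intent, "slots": match.groupdict()}
    return None  # the "Sorry, I don't know the answer" case


# When the ASR step hears the words correctly, the mapping is easy.
print(parse_intent("Play Beatles radio"))
# When the ASR step mishears the words, no NLP step can recover the command.
print(parse_intent("Pay beetle video"))
```

The second call returns `None` even though the speaker said the same thing. The only difference is what the speech recognizer wrote down.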

To better understand most of the pieces that go into building an ASR system, take a look at the graph above, from an NLU course at Stanford. Some audio recognition and cognition programs use speech-to-text and then NLP to understand the meaning. Others, like SoundHound, use Speech-to-Meaning technology: instead of converting the speech to text first and then interpreting the text, they interpret the speech directly.

So the problem is not the NLP, but converting the sounds into words. Startups and academics use an existing collection of audio and transcripts to train their voice-enabled systems. So if this training data doesn’t contain voices with diverse accents, those systems will have a hard time understanding my mom, or anyone else with an accent.
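One way to check whether a system struggles with a particular accent is to compare its transcripts against what was actually said, grouped by speaker accent. The sketch below uses word error rate (WER), a common metric for speech recognition, computed as word-level edit distance divided by the number of words spoken. The function names and the sample transcripts are assumptions made up for illustration; they are not measurements from any real device.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def edit_distance(ref: List[str], hyp: List[str]) -> int:
    """Word-level Levenshtein distance (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1]


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = edit distance / number of words in the reference."""
    ref = reference.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    return edit_distance(ref, hypothesis.lower().split()) / len(ref)


def wer_by_group(samples: Iterable[Tuple[str, str, str]]) -> Dict[str, float]:
    """Pooled WER per group from (group, reference, hypothesis) triples."""
    errors: Dict[str, int] = defaultdict(int)
    words: Dict[str, int] = defaultdict(int)
    for group, reference, hypothesis in samples:
        ref = reference.lower().split()
        errors[group] += edit_distance(ref, hypothesis.lower().split())
        words[group] += len(ref)
    return {group: errors[group] / words[group] for group in words if words[group]}


# Made-up transcripts for illustration only, not real measurements.
samples = [
    ("accent_a", "play beatles radio", "play beatles radio"),
    ("accent_b", "play beatles radio", "pay beetle video"),
]
print(wer_by_group(samples))
```

A large gap between groups on the same set of spoken phrases would suggest that the training data underrepresents one of those accents.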

Bigger companies like Amazon and Google use their own training data, but while they use more diverse audio, it’s still not good enough to understand different accents. Alexa has the ability to train its system using your own voice, which is definitely a good way to improve the experience over time.

But the problem is that companies don’t share the training sets they collect, so while one device might understand my mom’s accent better than others, it won’t solve the problem for all voice-enabled devices—or for all different accents.

Until companies start using bigger data sets of diverse accents and start opening up their data to other companies, we will have to repeat the same question over and over—or ask someone without an accent to play that song for us.