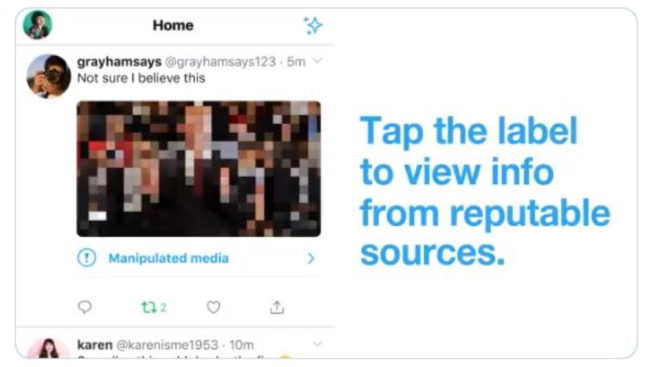

Twitter will still reserve outright removal of synthetic and manipulated media, widely referred to as deepfakes, for the most extreme cases, but people will soon start seeing more warning labels over blurred thumbnails, along with more explanation and clarification, the social network said Tuesday.

Twitter head of site integrity Yoel Roth and group product manager Ashita Achuthan revealed the social network’s new policies in a blog post, following the company’s release of potential rules last November and subsequent intake of public feedback.
