Facebook Introduces New Suicide Prevention Tools

Facebook introduced new suicide prevention tools to help users who may be thinking about suicide, as well as their friends and family.

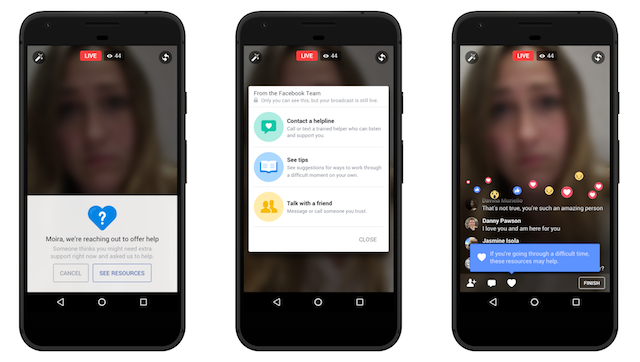

Facebook introduced new suicide prevention tools and resources, aimed at helping users who may be thinking about suicide, as well as their friends and family members.

Facebook already offers a number of suicide prevention tools, such as allowing users to report concerning posts. Now, the platform’s suicide prevention tools have been integrated into Facebook Live.

With this release, viewers watching a Facebook Live video in which the broadcaster seems to need help will have the option to report the video for its connection to “suicide or self-injury.” The reporter
